Introduction

Diagnostic bioinstrumentation is used routinely in clinical medicine and biological research for measuring a wide range of physiological variables. Generally, the measurement is derived from sensors or transducers and further processed by the instrument to provide valuable diagnostic information. Biomedical sensors or transducers are the main building blocks of diagnostic medical instrumentation found in many physician offices, clinical laboratories, and hospitals. They are routinely used in vivo to perform continuous invasive and non-invasive monitoring of critical physiological variables, as well as in vitro to help clinicians in various diagnostic procedures. Similar devices are also used in nonmedical applications such as in environmental monitoring, agriculture, bioprocessing, food processing, and the petrochemical and pharmacological industries.

Increasing pressures to lower health care costs, optimize efficiency, and provide better care in less expensive settings without compromising patient care are shaping the future of clinical medicine. As part of this ongoing trend, clinical testing is rapidly being transformed by the introduction of new tests that will revolutionize the way physicians will diagnose and treat diseases in the future. Among these changes, patient self-testing and physician office screening are the two most rapidly expanding areas. This trend is driven by the desire of patients and physicians alike to have the ability to perform some types of instantaneous diagnosis right next to the patient and to move the testing apparatus from an outside central clinical laboratory closer to the point of care.

Generally, medical diagnostic instruments derive their information from sensors, electrodes, or transducers. Medical instrumentation relies on analogue electrical signals as its input. These signals can be acquired directly by biopotential electrodes (for example, in monitoring the electrical signals generated by the heart, muscles, or brain) or indirectly by transducers that convert a nonelectrical physical variable, such as pressure, flow, or temperature, or a biochemical variable, such as the partial pressure of a gas or an ionic concentration, to an electrical signal. Since the process of measuring a biological variable is commonly referred to as sensing, electrodes and transducers are often grouped together and are termed sensors.

Biomedical sensors play an important role in a wide range of diagnostic medical applications. Depending on the specific needs, some sensors are used primarily in clinical laboratories to measure in vitro physiological quantities such as electrolytes, enzymes, and other biochemical metabolites in blood. Other biomedical sensors for measuring pressure, flow, and the concentrations of gases, such as oxygen and carbon dioxide, are used in vivo to follow continuously (monitor) the condition of a patient. For real-time continuous in vivo sensing to be worthwhile, the target analytes must vary rapidly and, most often, unpredictably.

Sensor Classifications

Biomedical sensors are usually classified according to the quantity to be measured and are typically categorized as physical, electrical, or chemical, depending on their specific applications. Biosensors, which can be considered a special subclassification of biomedical sensors, are a group of sensors that have two distinct components: a biological recognition element, such as a purified enzyme, antibody, or receptor, that functions as a mediator and provides the selectivity that is needed to sense the chemical component (usually referred to as the analyte) of interest, and a supporting structure that also acts as a transducer and is in intimate contact with the biological sensing element. The purpose of the transducer is to convert the biochemical reaction sensed by the biological recognition element into a quantifiable measurement, typically in the form of an optical, electrical, or physical signal that is proportional to the concentration of a specific chemical. Thus, a blood pH sensor is not considered a biosensor according to this classification, although it measures a biologically important variable. It is simply a chemical sensor that can be used to measure a biological quantity.

Sensor Packaging

Packaging of certain biomedical sensors, primarily sensors for in vivo applications, is an important consideration during the design, fabrication, and use of the device. Obviously, the sensor must be safe and remain functionally reliable. In the development of implantable biosensors, an additional key issue is the long operational lifetime and biocompatibility of the sensor. Whenever a sensor comes into contact with body fluids, the host itself may affect the function of the sensor, or the sensor may affect the site in which it is implanted. For example, protein adsorption and cellular deposits can alter the permeability of the sensor packaging that is designed to both protect the sensor and allow free chemical diffusion of certain analytes between the body fluids and the biosensor. Improper packaging of implantable biomedical sensors could lead to drift and a gradual loss of sensor sensitivity and stability over time. Furthermore, inflammation of tissue, infection, or clotting in a vascular site may produce harmful adverse effects. Hence, the materials used in the construction of the sensor’s outer body must be biocompatible, since they play a critical role in determining the overall performance and longevity of an implantable sensor. One convenient strategy is to utilize various polymeric covering materials and barrier layers to minimize leaching of potentially toxic sensor components into the body. It is also important to keep in mind that once the sensor is manufactured, common sterilization practices by steam, ethylene oxide, or gamma radiation must not alter the chemical diffusion properties of the sensor packaging material.

Sensor Specifications

The need for accurate medical diagnostic procedures places stringent requirements on the design and use of biomedical sensors. Depending on the intended application, the performance specifications of a biomedical sensor may be evaluated in vitro and in vivo to ensure that the measurement meets the design specifications.

To understand sensor performance characteristics, it is important first to understand some of the common terminology associated with sensor specifications. The following definitions are commonly used to describe sensor characteristics and to select sensors for particular applications.

Sensitivity

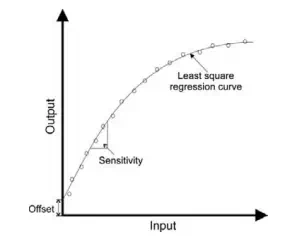

Sensitivity is typically defined as the ratio of the change in output to a given change in input. Another way to define sensitivity is as the slope of the calibration line relating the input to the output (i.e., ΔOutput/ΔInput), as illustrated in Figure 10.1. A high sensitivity implies that a small change in the input quantity causes a large change in the output.

FIGURE 10.1 Input versus output calibration curve of a typical sensor.

For example, a temperature sensor may have a sensitivity of 20 mV/°C; that is, the output of this sensor will change by 20 mV for a 1 °C change in input temperature. Note that if the calibration line is linear, the sensitivity is constant, whereas the sensitivity will vary with the input when the calibration is nonlinear, as illustrated in Figure 10.1. Alternatively, sensitivity can also be defined as the smallest change in the input quantity that will result in a detectable change in sensor output.
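The slope definition can be illustrated with a short Python sketch. The calibration data and the function name `local_sensitivity` are hypothetical, chosen only to echo the 20 mV per degree example; the sketch estimates the slope between adjacent calibration points for a linear and a nonlinear sensor.

```python
def local_sensitivity(inputs, outputs):
    """Return the slope (change in output / change in input)
    between each pair of adjacent calibration points."""
    return [
        (outputs[i + 1] - outputs[i]) / (inputs[i + 1] - inputs[i])
        for i in range(len(inputs) - 1)
    ]

# Hypothetical calibration inputs in degrees Celsius.
temps_c = [0.0, 10.0, 20.0, 30.0]

# Hypothetical linear sensor output in mV (note the 5 mV offset at zero input).
linear_mv = [5.0, 205.0, 405.0, 605.0]

# Hypothetical nonlinear sensor output in mV at the same inputs.
nonlinear_mv = [0.0, 100.0, 400.0, 900.0]

print(local_sensitivity(temps_c, linear_mv))     # same slope in every interval
print(local_sensitivity(temps_c, nonlinear_mv))  # slope changes with the input
```

For the linear data the slope is the same in every interval, while for the nonlinear data it depends on where along the curve it is evaluated.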

Range

The range of a sensor corresponds to the minimum and maximum operating limits that the sensor is expected to measure accurately. For example, a temperature sensor may have a nominal performance over an operating range of -200 to +500 °C.

Accuracy

Accuracy refers to the difference between the true value and the actual value measured by the sensor. Typically, accuracy is expressed as a ratio between the preceding difference and the true value and is specified as a percent of full-scale reading. Note that the true value should be traceable to a primary reference standard.
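As a rough illustration, the error can be normalized either by the true value or by the full-scale reading. The Python sketch below shows both conventions; the function names and the numerical values are assumptions made for the example, not taken from a particular sensor.

```python
def percent_of_reading_error(measured, true_value):
    """Error relative to the true value, in percent."""
    return 100.0 * (measured - true_value) / true_value

def percent_full_scale_error(measured, true_value, full_scale):
    """Error relative to the full-scale reading, in percent."""
    return 100.0 * (measured - true_value) / full_scale

# Hypothetical pressure reading in mmHg on a sensor with 500 mmHg full scale.
measured = 101.5
true_value = 100.0   # assumed traceable to a reference standard
full_scale = 500.0

reading_error = percent_of_reading_error(measured, true_value)
full_scale_error = percent_full_scale_error(measured, true_value, full_scale)
print(f"{reading_error:.2f} % of reading, {full_scale_error:.2f} % of full scale")
```

The same absolute error looks smaller when quoted against full scale, so it matters which convention a data sheet uses.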

Precision

Precision refers to the degree of measurement reproducibility. Very reproducible readings indicate a high precision. Precision should not be confused with accuracy. For example, measurements may be highly precise but not necessarily accurate.
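The distinction can be made concrete with a small Python sketch. It assumes, for illustration only, that accuracy is summarized by the mean error (bias) of repeated readings and precision by their sample standard deviation; the readings and the helper name `bias_and_spread` are hypothetical.

```python
import statistics

def bias_and_spread(readings, true_value):
    """Return (mean error, sample standard deviation) of repeated readings."""
    bias = statistics.mean(readings) - true_value
    spread = statistics.stdev(readings)
    return bias, spread

true_temp = 37.0  # hypothetical reference temperature in degrees Celsius

# Tightly clustered readings that all sit about 1 degree too high.
precise_not_accurate = [38.1, 38.0, 38.1, 38.0, 38.1]

# Widely scattered readings that average out to the true value.
accurate_not_precise = [36.2, 37.9, 36.8, 37.5, 36.6]

for name, data in [("precise, not accurate", precise_not_accurate),
                   ("accurate, not precise", accurate_not_precise)]:
    bias, spread = bias_and_spread(data, true_temp)
    print(f"{name}: bias = {bias:+.2f}, spread = {spread:.2f}")
```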

Resolution

When the input quantity is increased from some arbitrary nonzero value, the output of a sensor may not change until a certain input increment is exceeded. Accordingly, resolution is defined as the smallest distinguishable input change that can be detected with certainty.

Reproducibility

Reproducibility describes how close the measurements are when the same input is measured repeatedly over time. When the range of measurements is small, the reproducibility is high. For example, a temperature sensor may have a reproducibility of 0.1 °C for a measurement range of 20 °C to 80 °C. Note that reproducibility can vary depending on the measurement range. In other words, readings may be highly reproducible over one range and less reproducible over a different operating range.

Offset

Offset refers to the output value when the input is zero, as illustrated in Figure 10.1.

Linearity

Linearity is a measure of the maximum deviation of any reading from a straight calibration line. The calibration line is typically defined by the least-squares regression fit of the input versus output relationship. Typically, sensor linearity is expressed as either a percent of the actual reading or a percent of the full-scale reading.

The conversion of an unknown quantity to a scaled output reading by a sensor is most convenient if the input-output calibration equation follows a linear relationship. This simplifies the measurement, since we can multiply the measurement of any input value by a constant factor rather than using a “lookup table” to find a different multiplication factor that depends on the input quantity when the calibration equation follows a nonlinear relation. Note that although a linear response is sometimes desired, accurate measurements are possible even if the response is nonlinear as long as the input-output relation is fully characterized.
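A minimal Python sketch of this linearity measure is given below. It fits a least-squares line to calibration data and reports the largest deviation as a percent of full scale. The data are hypothetical, and taking full scale to be the span of the recorded outputs is an assumption made for the example.

```python
def least_squares_line(x, y):
    """Return (slope, intercept) of the least-squares line through (x, y)."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x

def nonlinearity_percent_full_scale(x, y):
    """Maximum deviation from the fitted line, as a percent of the output span."""
    slope, intercept = least_squares_line(x, y)
    max_dev = max(abs(yi - (slope * xi + intercept)) for xi, yi in zip(x, y))
    full_scale = max(y) - min(y)  # assumption: full scale = output span
    return 100.0 * max_dev / full_scale

# Hypothetical pressure sensor calibration: input in mmHg, output in mV.
pressure_mmhg = [0.0, 50.0, 100.0, 150.0, 200.0]
output_mv = [0.0, 10.2, 20.1, 29.8, 40.0]

slope, intercept = least_squares_line(pressure_mmhg, output_mv)
print(f"slope = {slope:.4f} mV/mmHg, intercept = {intercept:.2f} mV")
print(f"nonlinearity = {nonlinearity_percent_full_scale(pressure_mmhg, output_mv):.2f} % FS")
```

Once the slope and intercept are known, an unknown input is recovered from a reading as (reading - intercept) / slope, which is the constant-factor conversion described above.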

Response Time

The response time indicates the time it takes a sensor to reach a certain percent (e.g., 95 percent) of its final steady-state value when the input is changed. For example, it may take 20 seconds for a temperature sensor to reach 95 percent of its final steady-state value when a change in temperature of 1 °C is measured. Ideally, a short response time indicates the ability of a sensor to respond quickly to changes in input quantities.
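The sketch below shows one way the 95 percent response time might be read from a sampled step response. It assumes the last sample represents the steady-state value, and it generates test data from a first-order (single time constant) model, which is an assumption of the example rather than a property of every sensor. For such a model the 95 percent time is about three time constants, since -ln(0.05) is approximately 3.

```python
import math

def response_time(times, outputs, fraction=0.95):
    """Return the first sample time at which the output has covered
    `fraction` of the step from its first to its last recorded value."""
    start, final = outputs[0], outputs[-1]
    threshold = start + fraction * (final - start)
    rising = final >= start
    for t, y in zip(times, outputs):
        reached = y >= threshold if rising else y <= threshold
        if reached:
            return t
    return None

# Hypothetical first-order sensor with a 6.7 s time constant, sampled every 0.5 s.
tau = 6.7
times = [0.5 * k for k in range(201)]                # 0 s to 100 s
outputs = [1.0 - math.exp(-t / tau) for t in times]  # normalized step response

t95_sampled = response_time(times, outputs)
t95_model = -tau * math.log(0.05)  # analytical value for the first-order model
print(f"sampled: {t95_sampled} s, first-order model: {t95_model:.2f} s")
```

The sampled estimate can only be as fine as the sampling interval, so it lands on the first sample at or after the analytical value.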

Drift

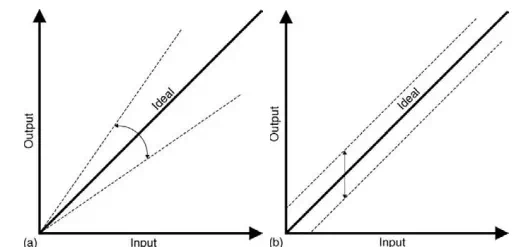

Drift refers to the change in sensor reading when the input remains constant. Drift can be quantified by running multiple calibration tests over time and determining the corresponding changes in the intercept and slope of the calibration line. Sometimes, the input-output relation may vary over time or may depend on another independent variable that can also change the output reading. This can lead to a zero (or offset) drift or a sensitivity drift, as illustrated in Figure 10.2. To determine zero drift, the input is held at zero while the output reading is recorded. For example, the output of a pressure transducer may depend not only on pressure but also on temperature. Therefore, variations in temperature can produce changes in output readings even if the input pressure remains zero. Sensitivity drift may be found by measuring changes in output readings for different nonzero constant inputs. For example, for a pressure transducer, repeating the measurements over a range of temperatures will reveal how much the slope of the input-output calibration line varies with temperature. In practice, both zero and sensitivity drifts specify the total error due to drift. Knowing the values of these drifts can help to compensate and correct sensor readings.

FIGURE 10.2 Changes in input versus output response caused by (a) sensitivity errors and (b) offset errors.
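As a sketch of how the two drift components might be separated, the Python example below fits a calibration line to two hypothetical calibration runs of a pressure transducer, taken at two temperatures, and compares the intercepts (zero drift) and slopes (sensitivity drift). All data values and function names are assumptions for illustration.

```python
def fit_line(x, y):
    """Return (slope, intercept) of the least-squares line through (x, y)."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x

def drift_between(x, y_reference, y_later):
    """Return (zero drift, sensitivity drift) between two calibration runs
    taken at the same inputs."""
    slope_ref, zero_ref = fit_line(x, y_reference)
    slope_later, zero_later = fit_line(x, y_later)
    return zero_later - zero_ref, slope_later - slope_ref

# Hypothetical calibration inputs in mmHg and outputs in mV.
pressure_mmhg = [0.0, 100.0, 200.0]
output_at_25c = [0.0, 50.0, 100.0]   # reference run
output_at_40c = [2.0, 53.0, 104.0]   # same inputs at a higher temperature

zero_drift, sensitivity_drift = drift_between(
    pressure_mmhg, output_at_25c, output_at_40c)
print(f"zero drift = {zero_drift:.2f} mV")
print(f"sensitivity drift = {sensitivity_drift:.4f} mV/mmHg")
```

With both values known, a later reading could be corrected by subtracting the zero drift and rescaling by the ratio of the reference slope to the drifted slope.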

Hysteresis

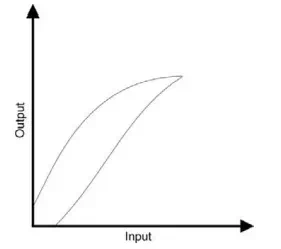

In some sensors, the input-output characteristic follows a different nonlinear trend, depending on whether the input quantity increases or decreases, as illustrated in Figure 10.3. For example, a certain pressure gauge may produce a different output voltage when the input pressure varies from zero to full scale and then back to zero. When the measurement is not perfectly reversible, the sensor is said to exhibit hysteresis. If a sensor exhibits hysteresis, the input-output relation is not unique but depends on the direction of change in the input quantity.
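One common way to put a number on hysteresis, sketched below in Python, is the largest gap between the outputs recorded on the increasing and decreasing sweeps at the same inputs, expressed as a percent of full scale. The sweep data are hypothetical, and using the overall output span as full scale is an assumption of the example.

```python
def hysteresis_percent_full_scale(up_outputs, down_outputs):
    """Largest gap between increasing-sweep and decreasing-sweep outputs,
    as a percent of the overall output span. Both lists must be ordered
    by the same increasing input values."""
    max_gap = max(abs(u - d) for u, d in zip(up_outputs, down_outputs))
    all_outputs = list(up_outputs) + list(down_outputs)
    full_scale = max(all_outputs) - min(all_outputs)
    return 100.0 * max_gap / full_scale

# Hypothetical pressure gauge: input in percent of full scale, output in volts.
pressure_percent = [0, 25, 50, 75, 100]
up_sweep_v = [0.0, 2.3, 4.8, 7.4, 10.0]     # input rising from zero to full scale
down_sweep_v = [0.0, 2.7, 5.3, 7.7, 10.0]   # input falling back to zero

hysteresis = hysteresis_percent_full_scale(up_sweep_v, down_sweep_v)
print(f"hysteresis = {hysteresis:.1f} % of full scale")
```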

The following sections will examine the operation principles of different types of biomedical sensors, including examples of invasive and non-invasive sensors for measuring biopotentials and other physical and biochemical variables encountered in different clinical and research applications.

FIGURE 10.3 Input versus output response of a sensor with hysteresis.
